How are People Interacting with AI: A Vital Research Gap

As communication scholars, we love two things: a solid theory and an intriguing research gap. Gaps in knowledge ignite curiosity and invite explorations of what we do not yet understand. Of course, reviewers prefer we frame arguments around what we do know, and they are right! But both theories and gaps reveal opportunities.

One such opportunity lies in recent survey data from the U.S., highlighting an important gap that communication scholars are well-positioned to explore: The more people learn about artificial intelligence (AI), the more concerned they are about it.

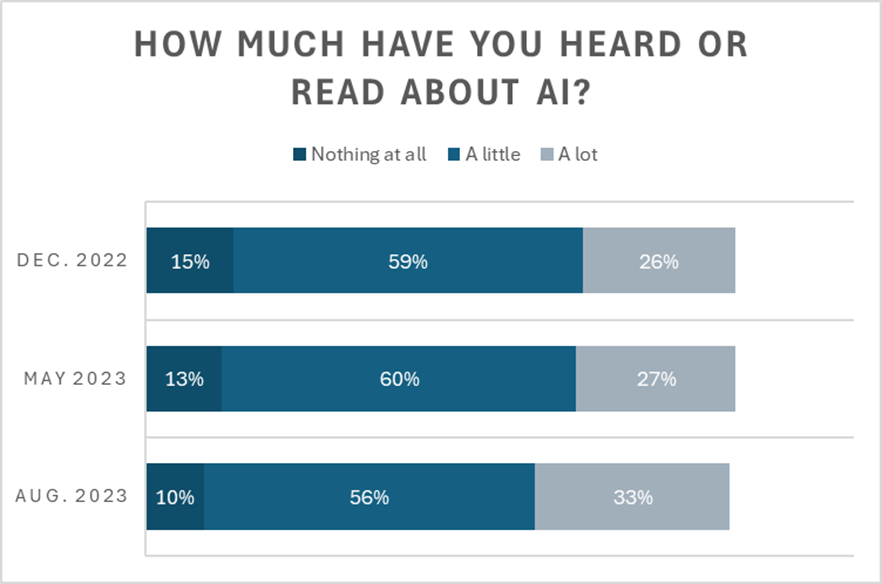

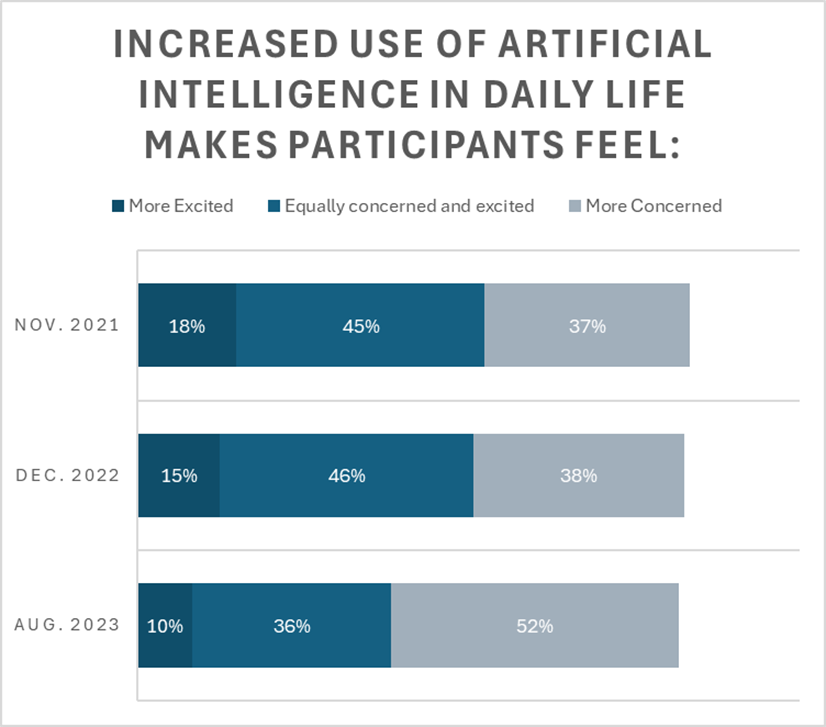

The figures above show responses consistent with that claim, courtesy of publicly accessible Pew Research data.

Note: Data may not add to 100 because non-responses are excluded. All Pew Research data accessed via public reports and https://www.pewresearch.org/question-search/. Pew Research Center bears no responsibility for the analyses or interpretations of the data presented here. The opinions expressed herein, including any implications for policy, are those of the author and not of Pew Research Center.

Clearly there are some trends in this data over time. On one hand, people have heard and read more about AI. At the same time, excitement is waning, and concern seems to be growing. An intriguing empirical gap!

What is going on here? Perhaps we are being exposed to more negative narratives about AI. Certainly there are serious challenges to privacy, unfulfilled promises, and a lack of transparency which prompt concerns—but that is a topic for someone else’s book. No doubt our colleagues who explore socio-cultural approaches to media have more to say on this topic. My own approach leans more socio-psychological.

Complicating matters, the Pew data from December 2022 show, across 10,000+ participants, a small positive relationship between how much participants had heard or read about AI and how excited they were about it (r = .15, p < .001). How often participants used AI was also positively related to excitement about AI (r = .20, p < .001). Restated, the more participants reported they had heard about AI and the more they used it, the more excited they tended to be about AI. Contrast that with the year-over-year survey data in the figures, which show both increases in knowledge and growing concern. What a gap!

Addressing the AI Research Gap with Communication Theory

Could it be that the more we learn as a society about AI, the more our excitement ebbs and our concern grows? New technology is often met with fear and resistance. Some scholars call this phenomenon a moral panic, emphasizing utopian and dystopian logics that accompany adoption. But the survey data also suggests that the more each person knows about or uses AI, the more excited they are about the technology. This finding might also be expected because perceived utility tends to yield usage intentions. If so, the gap closes a bit. It seems that if you want to understand how people think about AI, you really need to know how people communicate with and about machines.

Several communication theories help shed light on this gap. I am not offering one definitive answer to “What’s happening here?” Instead, I want to introduce some of the most compelling ideas from the growing body of Human-Machine Communication (HMC) research. Don’t worry, I will skip the academic citations here, but feel free to dive deeper through the resources on my lab’s website: https://hmc.ku.edu/.

Media Equation and Computers as Social Actors

One of the oldest HMC theories is the Media Equation, which led to the very popular Computers Are Social Actors (CASA) paradigm. The CASA argument is simple: People treat computers (and other complex machines) in a mindless way, leading them to apply social norms from interactions with other people. Ever catch yourself saying “please” or “thank you” to Siri or Alexa? That’s CASA at work.

Scholars continue to leverage this framework to explain how HMC unfolds. But more recent scholarship seeks to uncover boundary conditions: What happens if we aren’t engaging mindlessly? What cues are most relevant in promoting CASA effects? How do meaningful relationships with AI develop? And how do things change as AI becomes even more sophisticated? These boundaries offer insights into the gaps I identified above. Perhaps users are engaging more meaningfully with AI compared to early experiments, perhaps our relationships with machines are gaining meaning and prompting new communication scripts, and perhaps other boundary conditions offer the answer.

Human-Machine Interaction Scripts

Underlying much of the work on CASA is a presumption that people follow a human-to-human interaction script when interacting with complex machines. But what if people apply different social scripts? The folks in the COMBOTLABS have work suggesting people may adjust their expectations of machines before, during, and after interaction, forming novel human-machine scripts. Their core argument is that human-human interaction scripts remain useful explanations, but it is quite logical to expect new human-machine interaction scripts to emerge that explain how people interact with machines. We ought to expect new communication norms to emerge as machines become both more complex and commonplace. Again, communication theory presents strong potential insight into the gap. I think we might be bridging this gap a bit!

Machine Heuristic

One of the interesting and overt dynamics in the Pew Research national random sample data is that people consider machines favorable for some tasks (e.g., tracking attendance, monitoring driving) but oppose them for others (e.g., making a hiring decision, analyzing employees’ facial expressions). This finding is articulated in the communication literature by Sundar and colleagues as the machine heuristic. Put simply, people generally expect machines to perform in objective, unbiased, and predictable ways. People also expect machines to be worse at tasks that involve subjective judgment or interpretation. The reality is AI is already doing more than people expected and expanding our definitions of what is possible and acceptable. Perhaps as AI expands in scope and ability, users are regularly recategorizing, rethinking, and redefining the tasks this communication partner is “good” at. Thus, the machine heuristic might offer insights into why people’s expectations about the future of technology diverge from and converge with their knowledge and experience using the technology. Mind the gap: I have one final set of theories to share.

Self-Other Perception, Introspective Illusions, and Skills-Biases

Understanding how people perceive AI’s “thoughts” is a tricky business. It is a challenge rooted in a concept called theory of mind: our attempt to infer what others are thinking. That may sound deeply psychological, but if you have ever wondered “why does my Roomba vacuum in that strange pattern?” then you have already given it a try. We often make inferences about both what AI “thinks” and what other people think. Of course, we cannot know what AI “thinks” just as we cannot know what any communication partner is truly thinking, feeling, believing, etc. We call this self-other perceptual challenge the introspective illusion. This introspective imbalance is not new; rather, it is manifesting in a new way in our interactions with (machine) others.

Biases like the introspective illusion can be quite useful in explaining how people think about AI. Consider the common view among Americans that AI will change everything, unless the change involves them. Specifically, 2022 data from Pew shows 62% of people expected AI to have “a major impact” on workers in general, while only 28% expected it would impact them personally. This pattern holds when we ask workers about the impact across job domains relative to their own job. The point is simple: People believe AI will change things dramatically but are less likely to anticipate that changes prompted by AI will affect them personally.

Another pervasive bias manifests in assumptions that some jobs are more susceptible to complex machines than others. In 2017, Pew surveys asked this directly, and 77% of participants said fast food workers would likely be replaced. In contrast, only 20% estimated nurses would be likely to be replaced. Further, the data shows views of AI vary by education level such that more educated folks tend to have a more positive view of AI. My own work explores this dynamic and challenges the broadly held conception that AI is more likely to affect lower-skill work (i.e., the skills-biased technical change hypothesis).

Many biases shape people’s guesses about how AI can or should be received and used. Again, communication scholars have been (and ought to continue) exploring how these assumptions, stereotypes, and biased perceptions translate into interaction with AI. As communication scholars, if we want to know how people communicate with AI, we must ask how people think about AI communication partners.

Takeaways for Communication Scholars

I could easily dive into more theories related to AI, but instead I want to focus our attention on something broader: the value of classic communication theory. These time-tested frameworks help us understand the emerging research gaps AI presents as people communicate more with it. We need to combine theoretical explanations, relying heavily on powerful existing frameworks and modifying them to account for the novelty and complexity of HMC.

Consider expectancy violations theory, bandwagon heuristics, and frameworks emphasizing credibility and helpful cues. Each communication perspective offers insight into how people engage in HMC. Leveraging valuable and rich communication theory is the way to understand how people think about, feel about, and especially communicate with AI. I could go on, but dearest reader, I invite you to use your imagination and consider your own favorite communication theories. There are many theories that apply to the research gap I have shared here. And there are many pressing questions in your own area that deal with what AI means for communication.

It is no surprise that as people learn more about AI and use it more, their attitudes shift. For communication scholars, recognizing how these attitudes shape interactions with AI is crucial. In this short essay, I have outlined some of the key theories that help explain the “why” and “how” behind these intriguing research gaps. These communication frameworks offer us powerful tools to better understand—and perhaps even improve—our evolving relationship with AI.

Cameron W. Piercy, Ph.D. (he/him; cpiercy@ku.edu) is an Associate Professor of Communication Studies at the University of Kansas and the Founding Director of the Human-Machine Communication Lab (https://hmc.ku.edu).